Other general reviews of sonority include Parker 2002 and Parker 2011. The former is a dissertation focusing entirely on sonority, including an eighty-six-page literature summary.

Connectionist networks have been used to automatically syllabify random strings of segments in Berber. In this approach, sonority is a function of bidirectional excitation of competing segments across time, driven by global harmony maximization using exponentially weighted constraints. Another important issue involves the functional explanation of sequencing tendencies. The analysis of clusters in terms of well-configured sonority slopes has been rejected by some scholars in favor of an optimal ordering of segments to enhance their auditory cue robustness. This approach replaces sonority with perceptual constraints ranking phonological environments by their likelihood of assisting the hearer to recover critical aspects of the speech signal. Cutting-edge technology has made a significant contribution in other related areas, too. For example, a study of Italian with electromagnetic articulography shows a difference in the coordination patterns of gestural targets for initial clusters such as /pr/ versus /sp/ (a sonority reversal). This finding suggests that the two sequences have different prosodic structures, a crucial detail that is often downplayed: if “exceptional” clusters such as /sp/ are not in fact tautosyllabic, then they actually confirm the sonority principle rather than violate it.

The domain in which sonority is most often invoked is the syllable. Three chapters in major phonology handbooks are cited in this section, each one noting the close connection between sonority and syllable structure. The first is Blevins 1995, couched in a generative framework. Goldsmith 2011 discusses syllables in the revised edition of that same handbook, taking a more historical perspective. Zec 2007 situates the account of sonority effects within Optimality Theory, in keeping with the focus of the handbook in which it appears.

Recent research on sonority has revived a debate about its innateness. For example, experiments asking speakers of various languages to rate the naturalness of, or to pronounce, forms containing non-native clusters show that universal markedness constraints involving sonority predict accuracy on such tasks. However, other studies counter that this knowledge can be acquired by extrapolating statistical generalizations from the lexicons of those languages, without a prior bias concerning preferred sonority differentials. An exciting development is the emergence of computational algorithms that can directly calculate the relative sonority of acoustic samples and potentially segment them, based on various phonetic parameters. These algorithms have contributed to automated speech recognition.


The propensity for a segment to pattern as moraic is proportional to its sonority. Observations of this kind have led to implicational generalizations, such as lower sonority nuclei entailing the existence of nuclei from all higher sonority classes in a particular language. However, while generalizations of this kind are strong, some have counterexamples, raising questions about the adequacy of sonority and how to encode it grammatically.
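The nucleus implication mentioned above can be read as a closure condition on a sonority scale: if a language allows nuclei from some class, it should allow nuclei from every more sonorous class. The following is a minimal sketch under stated assumptions, not an analysis of any particular language. The four-way scale, the function name `satisfies_nucleus_implication`, and the sample inventories are all hypothetical; glides are left out on the assumption that a glide in nucleus position is treated as the corresponding vowel.

```python
# Assumed scale for nuclei, ordered from most to least sonorous.
# Glides are omitted (assumption: a syllabic glide counts as a vowel).
NUCLEUS_SCALE = ["vowel", "liquid", "nasal", "obstruent"]


def satisfies_nucleus_implication(nucleus_classes):
    """Return True if the set of permitted nucleus classes is closed upward.

    That is, whenever a class is permitted as a nucleus, every class that
    is more sonorous on NUCLEUS_SCALE is permitted as well.
    """
    allowed = set(nucleus_classes)
    if not allowed:
        return True  # nothing permitted, so nothing can be violated
    # Index of the least sonorous class that the inventory permits.
    lowest = max(NUCLEUS_SCALE.index(cls) for cls in allowed)
    # Every class above that point on the scale must also be permitted.
    return all(cls in allowed for cls in NUCLEUS_SCALE[:lowest])


# Hypothetical inventories, for illustration only.
print(satisfies_nucleus_implication({"vowel"}))                     # True
print(satisfies_nucleus_implication({"vowel", "liquid", "nasal"}))  # True
print(satisfies_nucleus_implication({"vowel", "nasal"}))            # False
```

The last inventory fails because it permits syllabic nasals while skipping liquids. A language with that pattern would count as one of the counterexamples the text alludes to.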


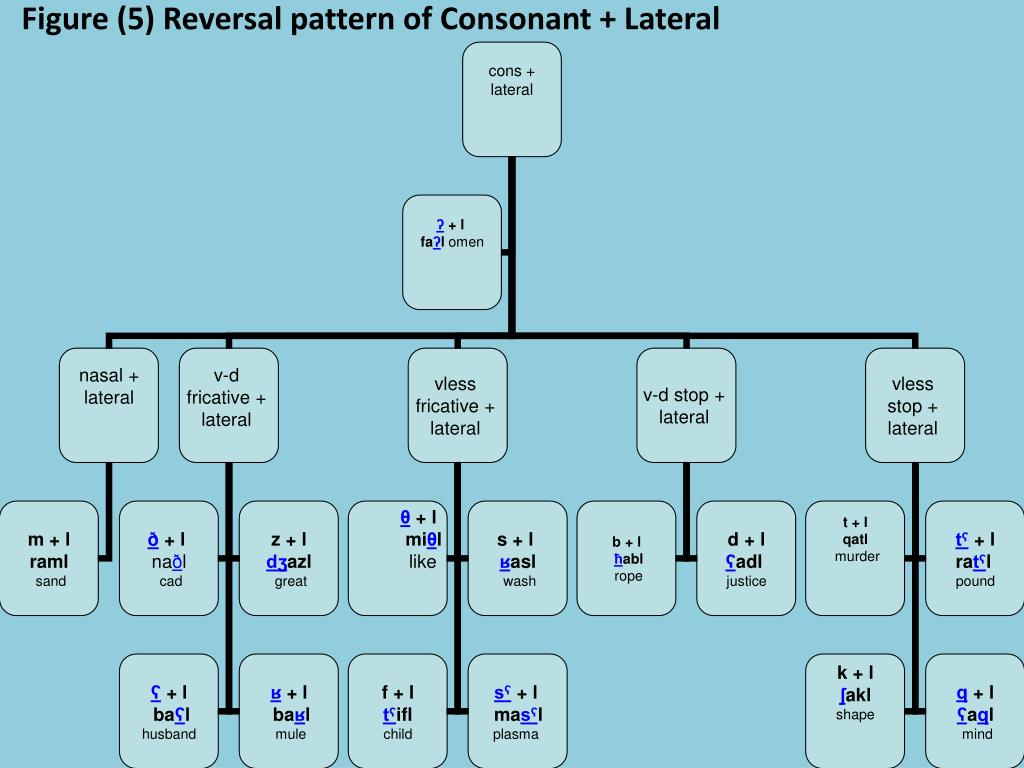

Sonority is a nonbinary phonological feature ranking sounds along a relative scale. Many versions of the sonority hierarchy exist; a common one is vowels > glides > liquids > nasals > obstruents. The phonetic basis of sonority is contentious; it is roughly but imperfectly correlated with loudness. A primary function of sonority is to linearize segments within syllables: more sonorous sounds tend to occur closer to the peak. Thus onsets prototypically contain an obstruent plus an approximant.
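The linearizing role of sonority can be made concrete with a small check on the sonority profile of a syllable. The sketch below assumes the five-way hierarchy quoted above, with arbitrary integer ranks, and a deliberately tiny segment inventory. The names `SONORITY`, `SEGMENT_CLASS`, `sonority_profile`, and `obeys_sequencing` are illustrative, and the strict rise-then-fall requirement is only one possible formalization of the tendency.

```python
# Integer ranks for the five-way hierarchy (assumed values; only the
# ordering matters): vowels > glides > liquids > nasals > obstruents.
SONORITY = {"obstruent": 1, "nasal": 2, "liquid": 3, "glide": 4, "vowel": 5}

# A small illustrative inventory, one character per segment.
SEGMENT_CLASS = {
    "p": "obstruent", "t": "obstruent", "k": "obstruent", "s": "obstruent",
    "m": "nasal", "n": "nasal",
    "l": "liquid", "r": "liquid",
    "j": "glide", "w": "glide",
    "a": "vowel", "i": "vowel", "u": "vowel",
}


def sonority_profile(syllable):
    """Map each segment of the syllable to its sonority rank."""
    return [SONORITY[SEGMENT_CLASS[seg]] for seg in syllable]


def obeys_sequencing(syllable):
    """Return True if sonority strictly rises to the peak, then strictly falls."""
    profile = sonority_profile(syllable)
    peak = profile.index(max(profile))  # first most-sonorous segment
    rising = all(a < b for a, b in zip(profile[:peak], profile[1:peak + 1]))
    falling = all(a > b for a, b in zip(profile[peak:], profile[peak + 1:]))
    return rising and falling


print(sonority_profile("pra"))   # [1, 3, 5]
print(obeys_sequencing("pra"))   # True: obstruent + approximant onset
print(obeys_sequencing("rpa"))   # False: sonority falls inside the onset
print(obeys_sequencing("spa"))   # False: plateau on this coarse scale
```

On this coarse scale /sp/ counts as a plateau, because both segments are obstruents. Scales that rank fricatives above stops would treat the same onset as a reversal.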
